Understanding where you stand in capturing and using data is a good way to build a road map for the future.

Two years ago, the president of Primus Builders knew he had a data-analysis problem, but he had no idea how big that problem actually was. All he really knew was that none of his tech solutions were “talking” to one another, leaving him with islands of data and redundant workarounds. Then he called in an expert and realized his problem was much worse.

That’s because Primus Builders was at the same stage of the data-analysis journey as many construction firms: siloed point solutions. In practice, that meant the company was paying for technology it no longer used, and hadn’t used in years, without anyone realizing it. It also meant workers were duplicating efforts to produce reports, and the information in those reports often conflicted because there was no single source of truth. Finally, it meant the company was at risk of hacking, ransomware attacks and other security breaches.

“The problem that a lot of people have is how do I get these applications to talk? How do I take my financial data and combine it with my schedule and my project management? There’s not really a clean way to do it,” said John Robba, chief technology officer for Primus Builders. “But collecting data is one thing. The real question is, what do you do with it? And that’s usually the hardest thing for companies to answer. So, the first thing is knowing what’s the goal you’re trying to accomplish? Then you can back into the details, and it will be very apparent if you selected the right partnerships and have the right internal resources to pull that off.”

Before any of that happens, though, Robba and others say it’s important to have a clear understanding of where your company is in its data-analysis journey, and how to move forward.

But for commercial construction firms looking to start a data-analysis journey and capitalize on the promise of data analytics, the path is anything but clear. FMI reports that 96% of data collected in the engineering and construction industry goes unused.

That’s a big mistake.

“The organizations that take the time to gather the data, analyze it and turn it into actionable insights will gain a competitive advantage. The ones that bury their heads in the sand and hope it goes away will be quickly left behind,” the authors of the FMI report warn.

For many firms, there are more questions than answers on how to begin analyzing and using data. A good place to start is understanding where you are in the process. Here’s a high-level look at the three stages most firms pass through on the way to effective data analysis, which can serve as a road map to help you move forward.

Understanding the Three Stages of Data Analysis and Where You Are on Your Journey

Step 1: Data collected in siloed point solutions

What it looks like: Companies in this scenario typically use multiple apps and tech tools to gather data. Although processes are being digitized, the apps and tools don’t integrate with one another, and thus, data becomes siloed in the various solutions. That means that to get a report, someone has to manually go into the different tools or apps and export the data. In fact, 42% of companies use four to six apps on their construction jobs, and 27% report that none of those apps integrate with one another. As a result, data is transferred manually nearly 50% of the time, according to the 2020 JBKnowledge Construction Technology Report.

Why it creates problems: It’s not just the siloing and manual data transfer that are problems. Using multiple apps and tools also means workers have to sign in to and navigate multiple passwords, interfaces and hardware. Because of that friction, workers sometimes simply don’t use the tools at all. Even when the tools are used consistently, someone ends up spending a lot of time checking whether the data is consistent and up to date. Answering the question, “What happened on the job site today?” becomes a data-wrangling challenge instead of a quick and easy answer.

How to move forward: Rather than manually pulling data from individual apps to run reports, companies can begin using a data warehouse or data lake that pulls in data from the various apps and tools and serves as the basis for reports. Once it is set up, this integration can be almost instantaneous and automatic. But these products and services can also be pricey. Another option for firms with capable IT departments is to build an in-house data warehouse on a cloud platform such as Amazon Web Services. Either way, companies can start small with incremental steps and grow from there.
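To make the idea concrete, here is a minimal Python sketch of what consolidating point-solution exports into one queryable store might look like. It uses an in-memory SQLite database as a stand-in for a data warehouse; the three export formats, field names and job IDs are hypothetical and do not come from any real product. A production warehouse or data lake would pull data through each vendor's export tools or APIs on a schedule rather than from hard-coded lists.

```python
import sqlite3

# Hypothetical exports from three unconnected point solutions. Each tool
# names its fields differently; none of these formats come from a real product.
FIELD_APP_ROWS = [
    {"job": "J-101", "day": "2021-03-01", "crew_hours": 64.0},
    {"job": "J-102", "day": "2021-03-01", "crew_hours": 40.0},
]
ACCOUNTING_ROWS = [
    {"project_id": "J-101", "date": "2021-03-01", "labor_cost_usd": 3840.0},
]
SCHEDULE_ROWS = [
    {"proj": "J-101", "as_of": "2021-03-01", "pct_complete": 0.35},
]


def load_warehouse(conn: sqlite3.Connection) -> None:
    """Copy each tool's export into one long-format table with a shared schema."""
    conn.execute(
        """CREATE TABLE IF NOT EXISTS job_facts (
               job_id TEXT, day TEXT, metric TEXT, value REAL, source TEXT)"""
    )
    rows = []
    # Map each tool's field names onto the shared (job, day, metric) schema.
    for r in FIELD_APP_ROWS:
        rows.append((r["job"], r["day"], "labor_hours", r["crew_hours"], "field_app"))
    for r in ACCOUNTING_ROWS:
        rows.append((r["project_id"], r["date"], "labor_cost_usd",
                     r["labor_cost_usd"], "accounting"))
    for r in SCHEDULE_ROWS:
        rows.append((r["proj"], r["as_of"], "pct_complete", r["pct_complete"], "schedule"))
    conn.executemany("INSERT INTO job_facts VALUES (?, ?, ?, ?, ?)", rows)
    conn.commit()


def daily_report(conn: sqlite3.Connection, job_id: str, day: str) -> dict:
    """Answer 'what happened on this job today?' with one query instead of three exports."""
    cur = conn.execute(
        "SELECT metric, value FROM job_facts WHERE job_id = ? AND day = ?",
        (job_id, day),
    )
    return dict(cur.fetchall())


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    load_warehouse(conn)
    print(daily_report(conn, "J-101", "2021-03-01"))
```

Storing everything in one long table of (job, day, metric, value, source) rows is a deliberate simplification: connecting a fourth tool means adding one more mapping loop rather than redesigning the schema, and the `source` column keeps a record of which tool each number came from.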

Step 2: Arguments over conflicting data: “Who’s right?”

What it looks like: Just as it sounds, this scenario finds two or more workers arguing over whose data is correct. For example, the field might report a different number of hours worked than the office does. Companies in this stage often have started to integrate and automate data capture, but they haven’t yet developed systems and approaches to ensure a single source of truth.
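A first step toward settling the hours-worked argument is simply detecting where the sources disagree. The following Python sketch assumes field and office records can be matched on a worker ID and date; the `HoursEntry` type, the 0.25-hour tolerance and the sample data are illustrative assumptions, not part of any particular system.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class HoursEntry:
    worker_id: str
    day: str
    hours: float


def find_conflicts(field_entries: list[HoursEntry],
                   office_entries: list[HoursEntry],
                   tolerance: float = 0.25) -> list[tuple]:
    """Return (worker_id, day, field_hours, office_hours) wherever the two
    sources differ by more than `tolerance` hours or one source has no record."""
    field_map = {(e.worker_id, e.day): e.hours for e in field_entries}
    office_map = {(e.worker_id, e.day): e.hours for e in office_entries}
    conflicts = []
    # Walk every worker/day seen by either source, in a stable order.
    for key in sorted(field_map.keys() | office_map.keys()):
        f = field_map.get(key)
        o = office_map.get(key)
        if f is None or o is None or abs(f - o) > tolerance:
            conflicts.append((key[0], key[1], f, o))
    return conflicts


if __name__ == "__main__":
    field_entries = [HoursEntry("W1", "2021-03-01", 10.0),
                     HoursEntry("W2", "2021-03-01", 8.0)]
    office_entries = [HoursEntry("W1", "2021-03-01", 8.0),
                      HoursEntry("W2", "2021-03-01", 8.0),
                      HoursEntry("W3", "2021-03-01", 6.0)]
    print(find_conflicts(field_entries, office_entries))
    # [('W1', '2021-03-01', 10.0, 8.0), ('W3', '2021-03-01', None, 6.0)]
```

Note that flagging W1 does not say which number is right. If the field counts travel time and the office does not, both figures may be "correct" under different definitions, which is why detection alone does not create a single source of truth.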

Why it creates problems: Without that single source of truth, trust in the system is lost, and buy-in from executives in the C-suite all the way down to field workers is lost with it. When that happens, even the best tech tools and integration won’t produce useful data because people stop taking the time to input it. The consequences are more than academic. Fully 52% of rework is caused by poor project data and miscommunication, which translated into $31.3 billion in rework in the U.S. alone in 2018, according to an FMI/Autodesk report.

How to move forward: The solution to this problem starts with metadata, a set of information that describes and gives context to other data. In practice, this means definitions must be provided for data in the user interface or dashboard. For example, what exactly counts as hours worked? The question may seem obvious, but without clear definitions, workers may make assumptions that corrupt the data. Once data is defined with metadata, companies must implement a data-documentation system that tracks where the data comes from and how it is entered. This practice is known as data lineage: it captures the data’s origin, what happens to it and where it moves over time. Data lineage provides visibility into data and makes it easier to trace errors back to their root cause.
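The two ideas in this step, a data dictionary of definitions and a lineage trail for each value, can be sketched in a few lines of Python. Everything here is hypothetical and meant only to illustrate the concepts: the definition of `hours_worked`, the system names and the class names are assumptions, and dedicated lineage tools typically capture this information automatically rather than through manual `record()` calls.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass(frozen=True)
class FieldDefinition:
    """One data-dictionary entry: the metadata that tells users what a number means."""
    name: str
    definition: str
    unit: str
    owner: str


@dataclass
class LineageStep:
    system: str  # where the value was at this step
    action: str  # what happened to it there


@dataclass
class TrackedValue:
    """A value that carries its own history from origin to dashboard."""
    field_name: str
    value: float
    lineage: List[LineageStep] = field(default_factory=list)

    def record(self, system: str, action: str) -> None:
        self.lineage.append(LineageStep(system, action))

    def origin(self) -> str:
        return self.lineage[0].system if self.lineage else "unknown"


# Hypothetical definition; each firm would agree on its own wording.
DATA_DICTIONARY: Dict[str, FieldDefinition] = {
    "hours_worked": FieldDefinition(
        name="hours_worked",
        definition="On-site labor hours per worker per day, excluding travel and meal breaks",
        unit="hours",
        owner="Field operations",
    ),
}


def describe(value: TrackedValue) -> str:
    """Combine the dictionary definition with the lineage trail for display."""
    d = DATA_DICTIONARY[value.field_name]
    path = " -> ".join(f"{s.system} ({s.action})" for s in value.lineage)
    return f"{value.value} {d.unit} of {d.name}: {d.definition}. Path: {path}"


if __name__ == "__main__":
    hours = TrackedValue("hours_worked", 8.0)
    hours.record("field_app", "entered by foreman")
    hours.record("warehouse", "loaded nightly")
    hours.record("dashboard", "shown on job report")
    print(hours.origin())  # field_app
    print(describe(hours))
```

When a number on a dashboard looks wrong, the lineage trail answers "where did this come from?" and the dictionary answers "what was it supposed to mean?", which are usually the first two questions in tracing an error to its root cause.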

Step 3: Enterprise-wide data accessibility

What it looks like: Companies in this phase of the data-analysis journey can run data reports and view data dashboards quickly and easily. The data is secure and reliable, and there is a trusted single source of truth. That in turn allows companies to use tools they otherwise couldn’t, because all the inputs are readily available. For example, with BIM, changes can be made in a 3D render using real-time job site data. That means if an architect or owner wants to make a change, a cost analysis can be done not only in real time but also based on how the project is actually going at that moment. Most importantly, there is a clear process for resolving discrepancies. The best companies use a combination of documentation, monitoring tools and a dedicated support team to maintain data accuracy and to keep stakeholders from making judgments based on inaccurate data.

Why it works: Because these companies see real-time data, they can use that insight to make actionable decisions about day-to-day operations that lead to greater efficiency and profitability. Questions that used to take hours or days to answer can now be resolved in seconds. This ability allows companies to be more aggressive and creative with different building scenarios, leading to still greater efficiency and cost savings. More time gets spent on high-value efforts rather than on finding and massaging data. Everyone in the organization is able to confidently and accurately answer the question, “What happened on the job site today?” The benefits of being able to answer that question (and others like it) are obvious to most contractors. In fact, contractors believe that advanced technologies can increase productivity (78%), improve schedules (75%) and enhance safety (79%), according to the 2019 USG + U.S. Chamber of Commerce Commercial Construction Index.

How to fully capitalize on it: Companies that have moved into this realm haven’t had to reinvent the wheel. Many other industries have already made headway toward enterprise-wide data accessibility, and a number of tools, services and products exist to help. Like Primus Builders, these companies have also prioritized data analysis by hiring experts to help them untangle the different apps and tools they use and develop a cohesive data-analysis strategy. No one tool or hire will solve this problem; it has to be an organization-wide initiative that plays to everyone’s strengths.

Moving Forward on Your Journey

Clearly, enterprise-wide data accessibility could benefit all contractors. But getting there requires a new way of thinking about building — and knowing when and from whom to ask for help along the way. The good news is that contractors don’t have to figure it all out immediately. They can move in the direction of enterprise-wide data accessibility in incremental steps. They can also look to other industries and experts for support and guidance.

The most important thing, though, is to start moving in the direction of enterprise-wide data accessibility — before it’s too late. Some topics to research moving forward:

- Hiring a data team.

- Understanding where data came from with data lineage.

- Understanding why data may appear inaccurate with data observability.

- Understanding how data is defined with a data dictionary.
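As a rough illustration of the observability item above, the Python sketch below runs three simple checks on a batch of records: freshness (did new data arrive recently?), completeness (are required fields filled in?) and plausibility (are hours worked within a sane range?). It assumes records arrive as dictionaries with a `loaded_at` timestamp; the field names, the 24-hour freshness window and the 0-24 hour range are hypothetical choices, and commercial observability tools go well beyond checks like these.

```python
from datetime import datetime, timedelta
from typing import Dict, List, Sequence


def observe(rows: List[Dict], now: datetime,
            max_age: timedelta = timedelta(hours=24),
            required: Sequence[str] = ("job_id", "hours_worked")) -> List[str]:
    """Return human-readable issues that could make the data look inaccurate."""
    if not rows:
        return ["no rows received"]
    issues = []

    # Freshness: stale data is a common reason a dashboard "looks wrong."
    newest = max(r["loaded_at"] for r in rows)
    if now - newest > max_age:
        issues.append(f"stale: newest record loaded {now - newest} ago")

    # Completeness: count missing values in each required field.
    for col in required:
        missing = sum(1 for r in rows if r.get(col) is None)
        if missing:
            issues.append(f"{missing} of {len(rows)} rows missing {col}")

    # Plausibility: a worker cannot log more than 24 hours in a day.
    bad = sum(1 for r in rows
              if r.get("hours_worked") is not None
              and not 0 <= r["hours_worked"] <= 24)
    if bad:
        issues.append(f"hours_worked outside 0-24 in {bad} of {len(rows)} rows")
    return issues


if __name__ == "__main__":
    loaded = datetime(2021, 3, 2, 6, 0)
    batch = [
        {"job_id": "J-101", "hours_worked": 8.0, "loaded_at": loaded},
        {"job_id": "J-101", "hours_worked": None, "loaded_at": loaded},
        {"job_id": "J-102", "hours_worked": 30.0, "loaded_at": loaded},
    ]
    for issue in observe(batch, now=datetime(2021, 3, 2, 12, 0)):
        print(issue)
```

Checks like these do not fix bad data, but they explain why a number may appear inaccurate before anyone starts arguing over it.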

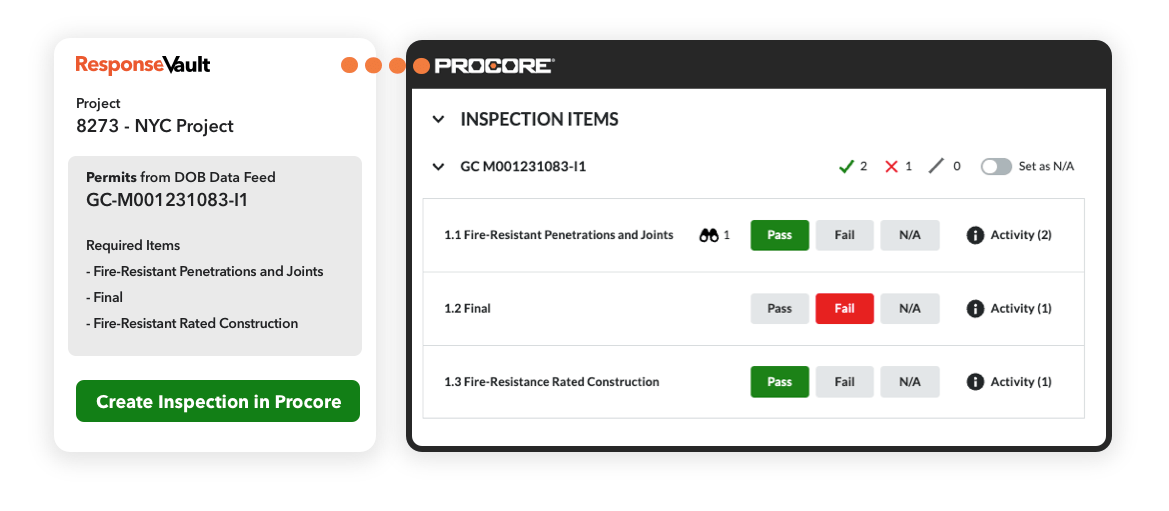

“We’ve seen many customers come in with pieces of the puzzle, but they need help with staffing and tooling to put the full picture together,” explained Matt Monihan, CEO of ResponseVault, a data engineering firm that specializes in the construction industry.

Ultimately, experts like Robba warn that getting that full picture is becoming increasingly vital not only to remaining competitive but also to surviving.

“Wake up, because you’re already living the nightmare. You’re already in the weeds, you just don’t know how tall the weeds are or even where the road is, or how far it is to the road to get out of the weeds,” Robba said. “Most people running organizations are going a million miles an hour. And data analytics is one of those things where you have to have the expertise. And then you actually have to take the time to sit down and go, ‘Yes, I’m going to focus on this and put an action plan together.’ But the bottom line is, you need to invest in your future now, because your competition definitely is.”
